DxOMark is pretty well respected, due to the care they take in analysing still and video photography. But they're treating phone cameras like DSLRs and, as such, aren't testing all the use cases and modes that people encounter out in the real world.

My evidence for this? Here are the top 18 smartphone cameras, as ranked by DxOMark for still photography:

- Sony Xperia Z5 - 88%

- Samsung Galaxy S6 Edge+ - 87%

- Samsung Galaxy Note 5 - 87%

- Nexus 6P - 86%

- LG G4 - 86%

- iPhone 6s Plus - 84%

- Samsung Galaxy Note 4 - 84%

- Sony Xperia Z3+ - 84%

- iPhone 6s - 83%

- Droid Turbo 2 - 84%

- Motorola Moto X Style - 83%

- Blackberry Priv - 82%

- Google Nexus 6 - 81%

- Nokia 808 - 81%

- OnePlus 2 - 80%

- HTC One A9 - 80%

- Samsung Galaxy S5 - 80%

- Nokia Lumia 1020 - 79%

At which point, if you've been around the world of Symbian or Windows Phone - or Nokia, generally - for a while, your jaw will drop and your opinion of DxOMark will drop faster.

Now, I should state that I too have tested most of the above smartphones and there are some cracking cameras in that bunch. And some middling units too. Yet most are much higher in the rankings than the two monster PureView devices from Nokia. How is this possible? Because DxOMark is only testing a small part of a camera's functions and performance.

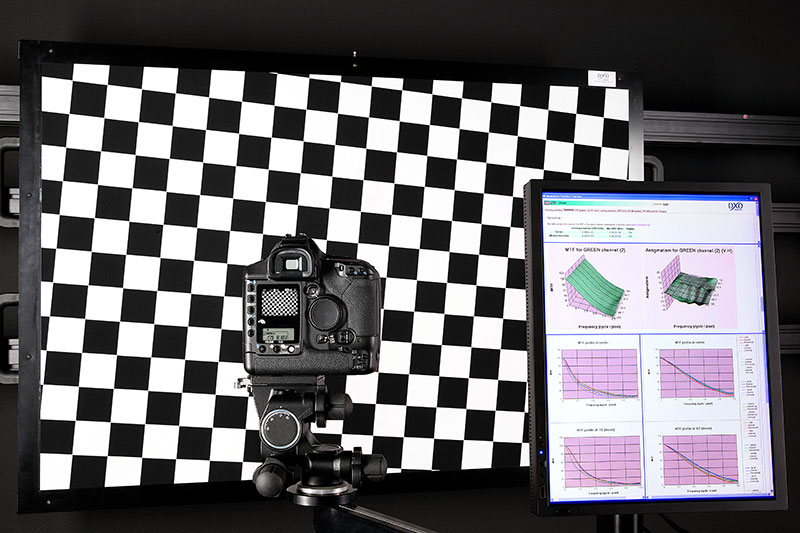

Part of DxOMark's testing rig...

Specifically, here's what's wrong with the methodology and ratings (in my opinion):

- Test photos are taken while tripod-mounted - obviously 99.9999% of real world photos by real users are taken handheld. I can appreciate why a tripod is used (to eliminate variation in hand wobble between devices), but it unfairly disadvantages all the phones with OIS (optical image stabilisation) - in this case the Lumia 1020's stabilisation works rather well and yet the OIS isn't used at all in testing.

- Flash isn't reported on in the scores. I appreciate that flash-lit shots (e.g. of pets, people) are rarely ideal and/or realistic, and that photo purists (like the DxOMark folks) avoid using flash wherever possible, but to not rate flash at all puts the two smartphone cameras with 'proper' (Xenon) flash at a huge disadvantage.

- Zooming isn't tested at all. I appreciate that this is because every phone other than the Nokia pair here can't really zoom at all, so DxOMark is using dumbed down expectations. But completely ignoring one of the core selling points of the 808 and 1020 is very disappointing.

- All tests are done with initial phone firmware - there's no concept at DxOMark of going back after a major OS/application update and checking out improved image capture. The 808 had this nailed at the outset, but the Lumia 1020 took a good year for its imaging performance to be best optimised. But none of this mattered, because all DxOMark seem to care about is rankings based on each phone's initial firmware. That's probably because of the workload involved in re-doing testing, but it's not really fair to any phone whose camera stumbles out of the blocks but then improves markedly.

To use an analogy, if these were car tests, it would be pitching a Ford Fiesta against a Ferrari but limiting both to 30mph, second gear-only and up and down a suburban road. All out of the factory, with no chance to 'wear in'. A complete travesty, in other words.

Answering the criticisms above, in my hypothetical (or maybe I should do this?) 'realMark' rankings, I'd cover (and rate based on):

- Static scenes in good and bad lighting, but handheld, so testing stabilisation as well as light gathering abilities and resolution.

- Flash lit photos in typical indoor/evening lighting, of both static and human subjects, the latter my standard 'party' test, of course.

- Testing optical/lossless/digital zoom - this is implemented in a variety of ways in modern hardware, but is certainly worth trying out - so many shots can be instantly improved, at capture time, by zooming in, to 'crop' the field of view and make the shot more 'intimate'.

- Re-testing devices after major software updates - hey, I'm not afraid of a little hard work(!)
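
As a rough sketch of how such a 'realMark' figure might be put together, the Python below combines per-category scores into one weighted number and always uses the most recent test run, so a re-test after a firmware update replaces the launch result. The category names follow the points above, but the weights, the function names (`real_mark`, `latest_real_mark`) and every number are assumptions made up purely for illustration, not measurements of any real phone and not anything DxOMark does.

```python
# Hypothetical 'realMark' sketch. Weights are invented for illustration;
# a real scheme would need its own justification for them.
WEIGHTS = {"handheld": 0.5, "flash": 0.25, "zoom": 0.25}


def real_mark(scores, weights=WEIGHTS):
    """Weighted average of 0-100 category scores, rounded to one decimal."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing categories: {sorted(missing)}")
    total_weight = sum(weights.values())
    weighted_sum = sum(scores[name] * w for name, w in weights.items())
    return round(weighted_sum / total_weight, 1)


def latest_real_mark(test_runs):
    """test_runs is a chronological list of (firmware_label, scores).

    Only the newest run counts, so a phone that improves after an
    update is ranked on its current performance, not its launch state.
    """
    label, scores = test_runs[-1]
    return label, real_mark(scores)


# Made-up scores for an imaginary phone, tested at launch and after an update.
runs = [
    ("launch", {"handheld": 80, "flash": 60, "zoom": 40}),
    ("update", {"handheld": 90, "flash": 60, "zoom": 40}),
]
print(latest_real_mark(runs))
```

Keeping every run in the list, rather than overwriting the old one, also means you could show how far a camera has come since launch.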

And I've been doing a lot of this over the years in my many camera phone head-to-head tests on AAS and AAWP - I seem to be the only one doing 'party' and 'zoom' testing in the tech world, sadly.

An example of the type of real world snap that is utterly ignored by DxOMark's methodology: handheld, fast moving and unpredictable subject(!), indoor lighting, etc. Somehow my Xenon-equipped Nokia didn't seem to care about its DxOMark score, it just provided me with a cracking photo! (Bonus link: the Art of Xenon)

Another shot made possible by using features not DxOMark-tested. In this case it's zoom, on the Nokia 808: here's Oliver's shot of his cat's eyes, with the zoom letting him get incredibly 'close' (see, it's not just for distant objects!) and providing fabulous natural 'bokeh'.

DxOMark (and many other tech site) reviews tend to major on well lit static shots. I appreciate these are easiest to test, but out in the real world people like shooting animate objects in all lighting conditions and at all distances. I've seen some horrors. Some of which, at least, would have been alleviated with a little stabilisation, Xenon flash and lossless zoom...

Here then is to 'realMark'. Copyright err.... me, 2016!

PS. As a tease to my next cameraphone test, see the snap below:(!)